The MGM Machine Learning Workbench

Combining gestural devices with machine learning techniques in performance makes a mode of high-level interaction possible. The methods of machine learning and pattern recognition can be re-appropriated to serve as a generative principle. The goal is not classification but a reaction from the system that is interactive and autonomous. This investigation looks at how machine learning algorithms fit generative purposes and what independent behaviours they enable. To this end we describe the artistic and technical developments made to leverage existing machine learning algorithms as generative devices, and discuss their relevance to the field of gestural interaction.

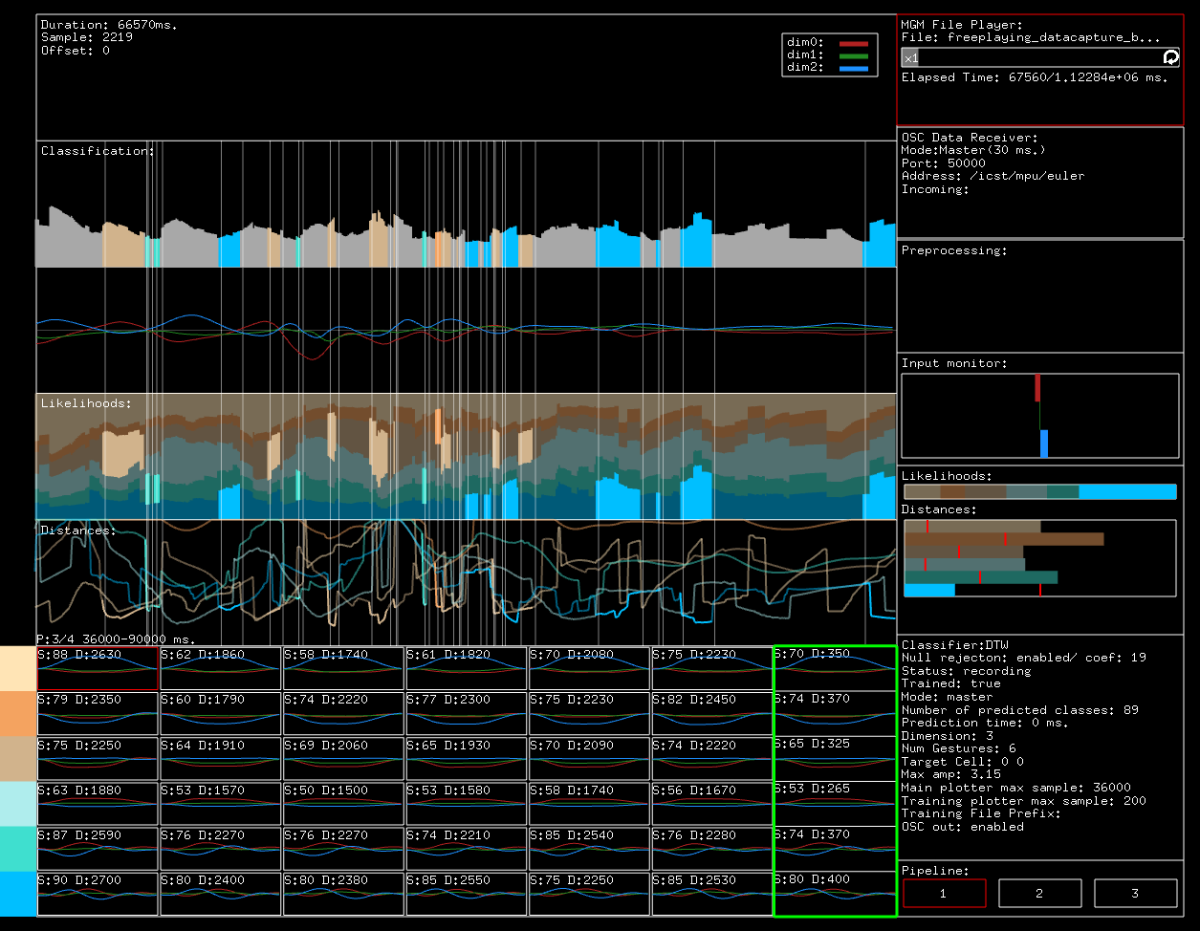

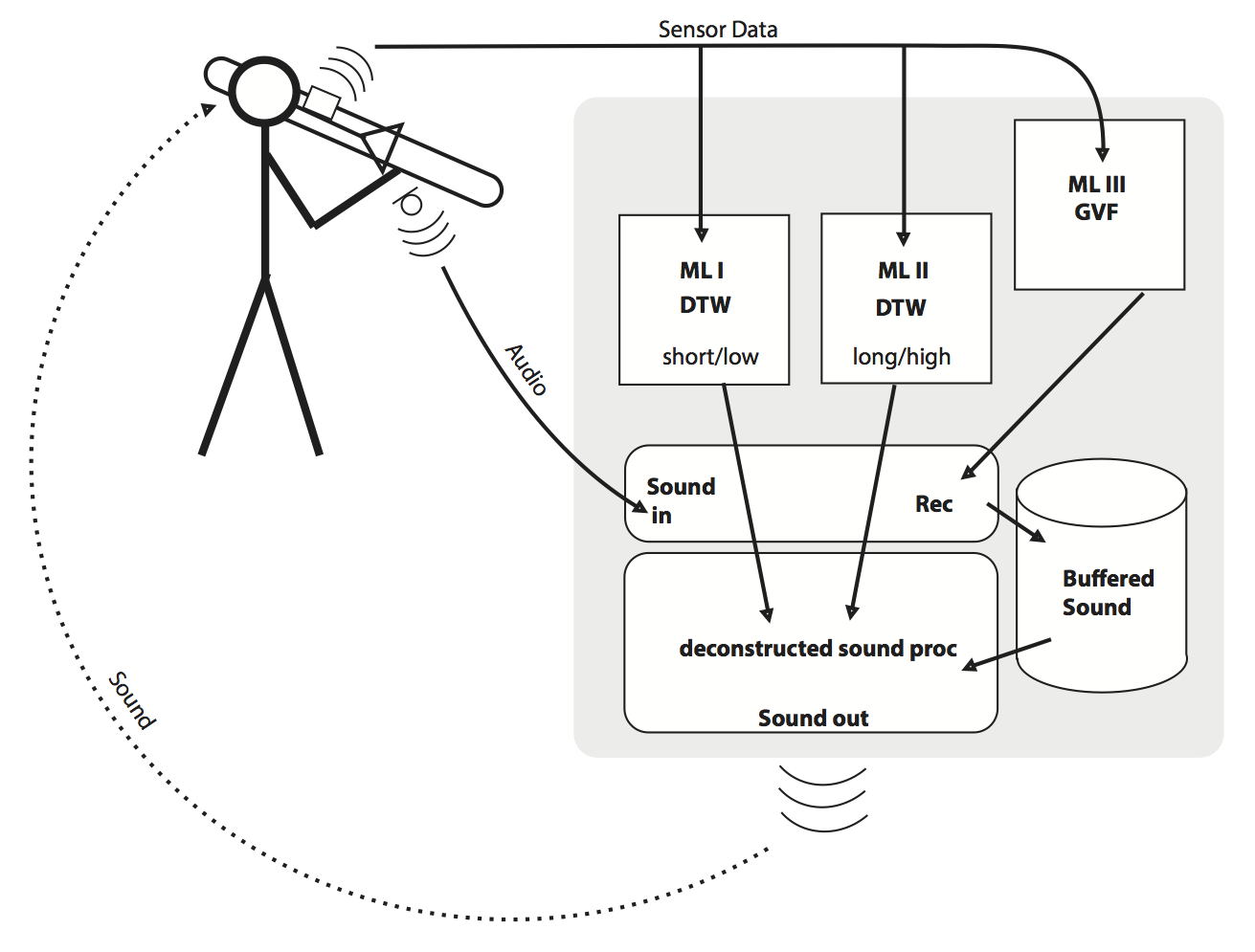

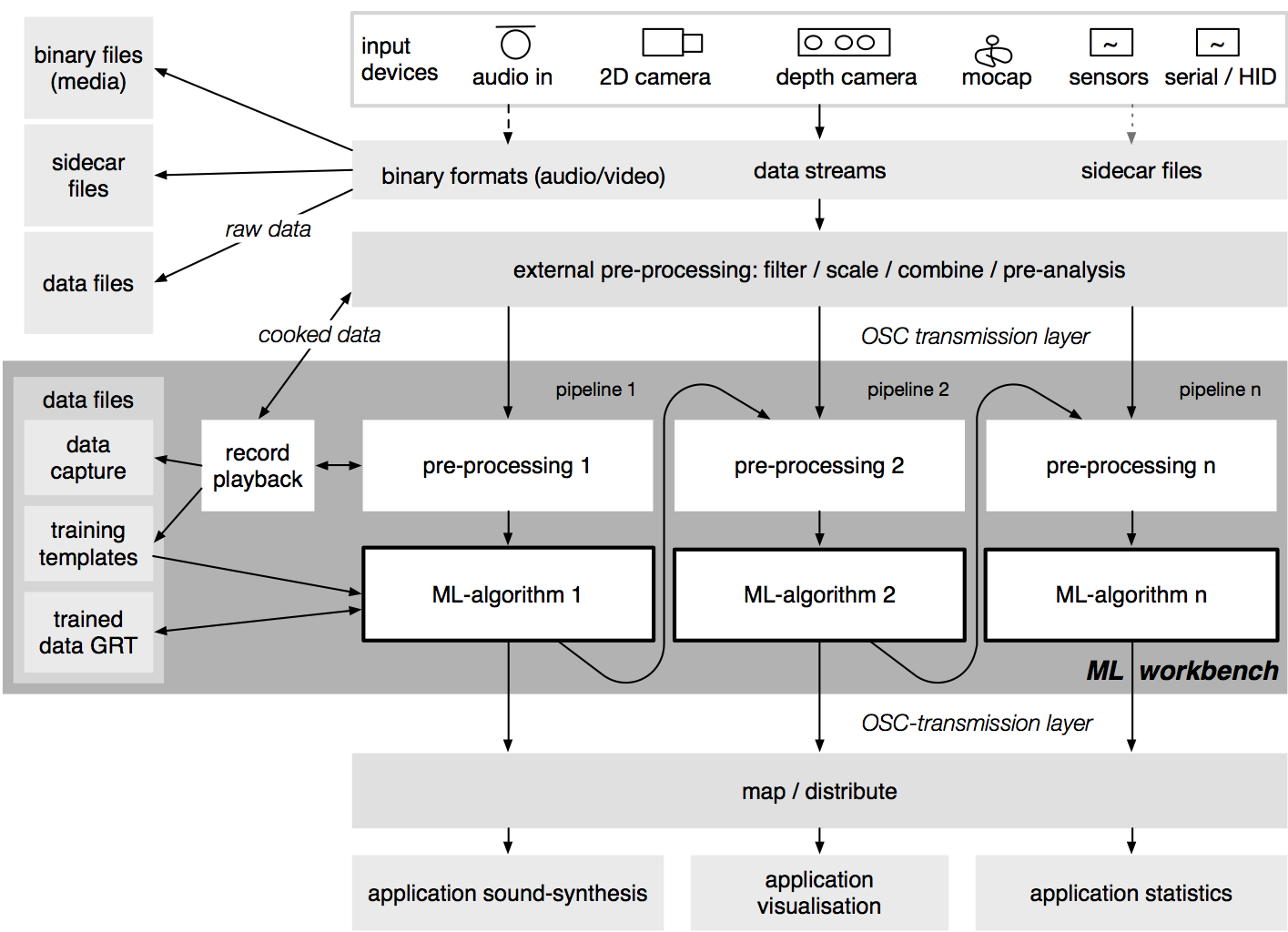

This tool implements data-recording and real-time classification of sensor data received via OSC. In the context of movement analysis, either a pre-recorded data-stream is read into the tool from a file, or data is streamed directly from another tool via OSC. The software is written in C++ and builds on the Gesture Recognition Toolkit (GRT), a machine learning library. In addition to the supervised and unsupervised classification algorithms provided by the GRT, the MLWorkbench implements the Gesture Variation Follower (GVF) as an additional classifier and will also integrate the XMM multimodal models. Its principal application is real-time gesture recognition, but the software structure also enables the analysis of motion data with algorithms that can generate results without previous training, such as support vector machines (SVM) \cite{nymoen2010searching} or self-organising maps (SOM).
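As an illustration of the kind of sensor frame that arrives via OSC, the following is a minimal sketch in Python of how an OSC 1.0 message carrying float values is laid out and decoded. It is not code from the MLWorkbench, which is written in C++; the address `/mocap/marker` and the function names are hypothetical, and only `float32` arguments are handled.

```python
import struct


def _pad(raw):
    """Null-terminate a byte string and pad it to a multiple of four bytes."""
    raw += b"\x00"
    return raw + b"\x00" * (-len(raw) % 4)


def encode_osc(address, values):
    """Build an OSC message whose arguments are all big-endian float32."""
    type_tags = "," + "f" * len(values)
    return (
        _pad(address.encode("ascii"))
        + _pad(type_tags.encode("ascii"))
        + struct.pack(">%df" % len(values), *values)
    )


def _read_string(packet, offset):
    """Read one padded OSC string; return it with the offset of the next field."""
    end = packet.index(b"\x00", offset)
    text = packet[offset:end].decode("ascii")
    # Skip the terminator and any padding up to the next 4-byte boundary.
    return text, (end + 4) & ~3


def decode_osc(packet):
    """Return (address, list of floats) for a float-only OSC message."""
    address, offset = _read_string(packet, 0)
    type_tags, offset = _read_string(packet, offset)
    if not type_tags.startswith(",") or set(type_tags[1:]) - {"f"}:
        raise ValueError("this sketch only handles float32 arguments")
    count = len(type_tags) - 1
    values = list(struct.unpack_from(">%df" % count, packet, offset))
    return address, values


# Hypothetical marker position (x, y, z) sent as one message.
packet = encode_osc("/mocap/marker", [0.5, 1.25, -2.0])
address, values = decode_osc(packet)
```

In a live setting the packet would arrive over UDP rather than being built locally; the decoded list of floats is what a recorder would store or a classifier would receive as one input frame.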

The intended model for data-analysis aims to apply machine-learning techniques to several types of data: audio-analysis, data from cameras, on-body sensors, and physiological data from both the performer \emph{and} the audience. Within this method we will explore the combination of derivatives of movement data with audio-features, with the intention of verifying the notion that where the measurements of two modalities diverge we have a moment of significant perceptual contrast.

Please see the NIME 2015 paper for details on the Machine Learning Workbench.

For an anonymous source checkout, run: git clone http://code.zhdk.ch/git/MLWorkbench.git

The MGM Motion Visualisation and Analysis Toolset

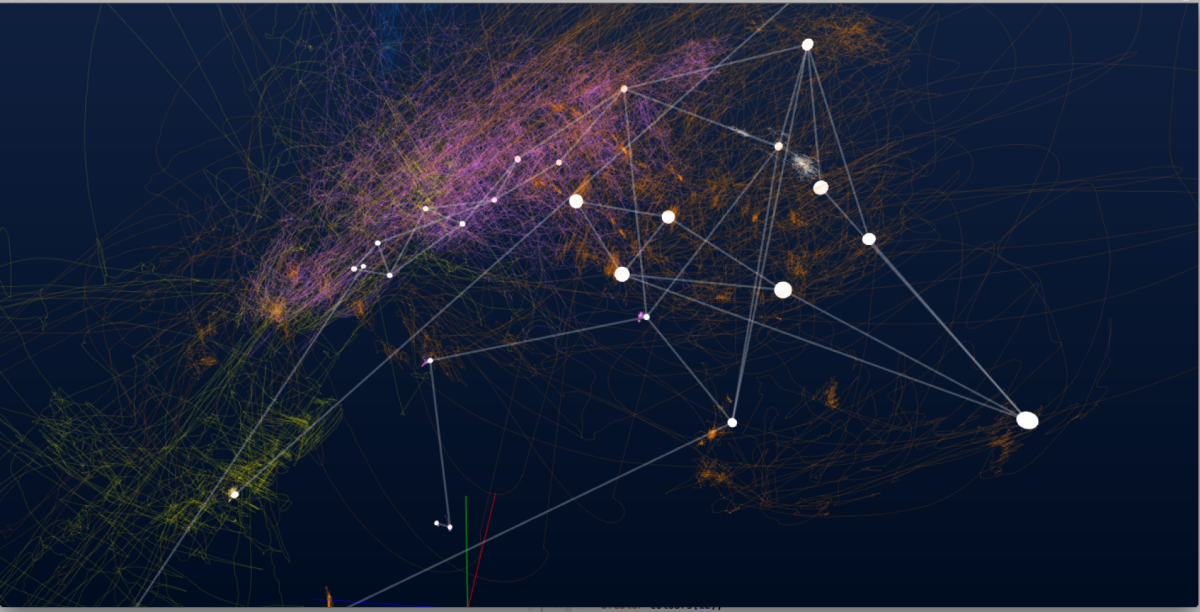

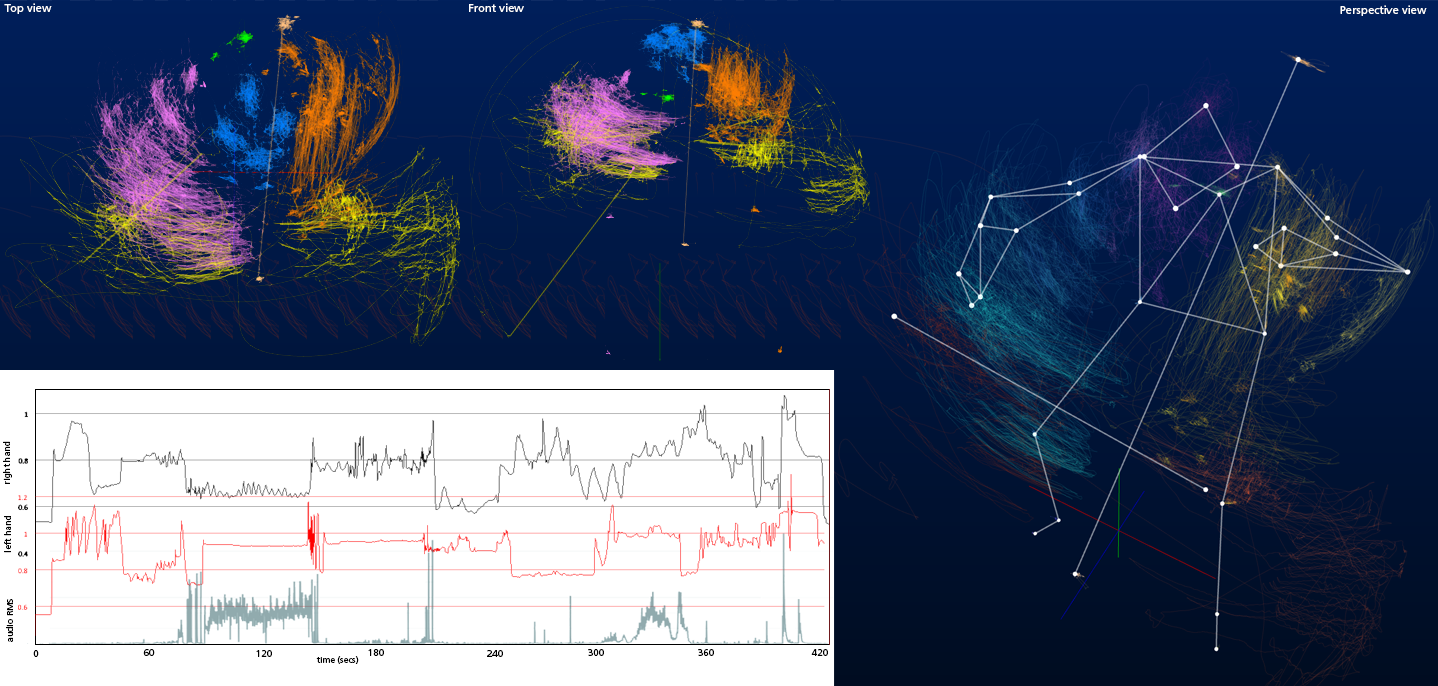

The third tool developed within the context of this project is a tool-suite for motion-capture data visualisation, treatment, and analysis. It can display the motion data in different ways and apply a number of analysis processes, such as calculation of derivatives, scaling, normalising, and resampling as needed. These analysis functions are equivalent to those provided by the MoCap toolbox and make it possible to plot specific perspectives of the data. It can load motion-capture datasets from files, and it can also run in real time on data streamed via OSC from the OptiTrack motion-capture system that we use. This way of working might be atypical and poses a number of specific challenges, not the least being the relatively high error rate that any marker-based motion-capture system produces.

One of the primary uses of this software is to provide an interactive visualisation of the motion-capture data-set. It displays different elements such as markers, stick-figures, marker-trajectories in different shapes, and the clustering of marker-groups that represent the limbs, the head, the bow, and the instrument (see Fig. \ref{fig:figure3}, where the 2D and 3D views of the entire marker-trajectories are displayed in clusters). As a first step towards cross-modal analysis, position information is compared to audio-features: in the lower half of Fig. \ref{fig:figure3} the vertical positions of the left and right hands are set next to the RMS loudness of the audio. This shows how in ‘Pression’ the energy used for the instrumental action does not always map directly to the resulting sonic energy.

On the contrary, many highly active sections produce little sound, whereas regular bowing sections with less activity produce much more. One interpretation of this observation is that the divergence indicates the presence of force, an aspect that can only be \emph{inferred} from kinematic motion data. A single bow-stroke is in itself a good model of this divergence: the initial force-point for string excitation is followed by an uncharged, force-less adaptive phase in which the string-vibration is maintained with minimal force \cite{Rasamimanana2006}.
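The comparison described above can be sketched in a few lines of Python. This is a hedged illustration and not the toolset's implementation: the function names, the forward-difference derivative, the min-max normalisation, and the simple signed difference used as a divergence measure are all assumptions chosen for clarity.

```python
import math


def speed(positions, rate_hz):
    """Absolute first derivative by forward differences (one value per interval)."""
    return [abs(b - a) * rate_hz for a, b in zip(positions, positions[1:])]


def frame_rms(samples, frame_len):
    """RMS loudness of consecutive, non-overlapping audio frames."""
    return [
        math.sqrt(sum(s * s for s in samples[i:i + frame_len]) / frame_len)
        for i in range(0, len(samples) - frame_len + 1, frame_len)
    ]


def normalise(values):
    """Min-max scale to the range 0..1; a constant input maps to zeros."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0] * len(values)
    return [(v - lo) / (hi - lo) for v in values]


def resample(values, n):
    """Linear interpolation to n points covering the same time span."""
    if n == 1 or len(values) == 1:
        return [values[0]] * n
    out = []
    for k in range(n):
        x = k * (len(values) - 1) / (n - 1)
        i = min(int(x), len(values) - 2)
        frac = x - i
        out.append(values[i] * (1 - frac) + values[i + 1] * frac)
    return out


def divergence(positions, rate_hz, samples, frame_len):
    """Normalised motion activity minus normalised loudness, per audio frame."""
    loudness = normalise(frame_rms(samples, frame_len))
    activity = resample(normalise(speed(positions, rate_hz)), len(loudness))
    return [a - l for a, l in zip(activity, loudness)]


# Toy data: the hand moves and then rests, while the sound starts only afterwards.
hand_height = [0, 1, 2, 3, 4, 4, 4, 4, 4]
audio = [0.0, 0.0] * 4 + [1.0, -1.0] * 4
curve = divergence(hand_height, 1.0, audio, 2)
```

Under these assumptions, values near +1 mark moments of high movement activity with little sound, and values near -1 mark sounding moments with little movement. Whether such moments correspond to applied force is the interpretation offered in the text, not something this measure can establish by itself.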

For information on the Movement Visualisation and Analysis Toolset, refer to the MoCo 2015 paper.